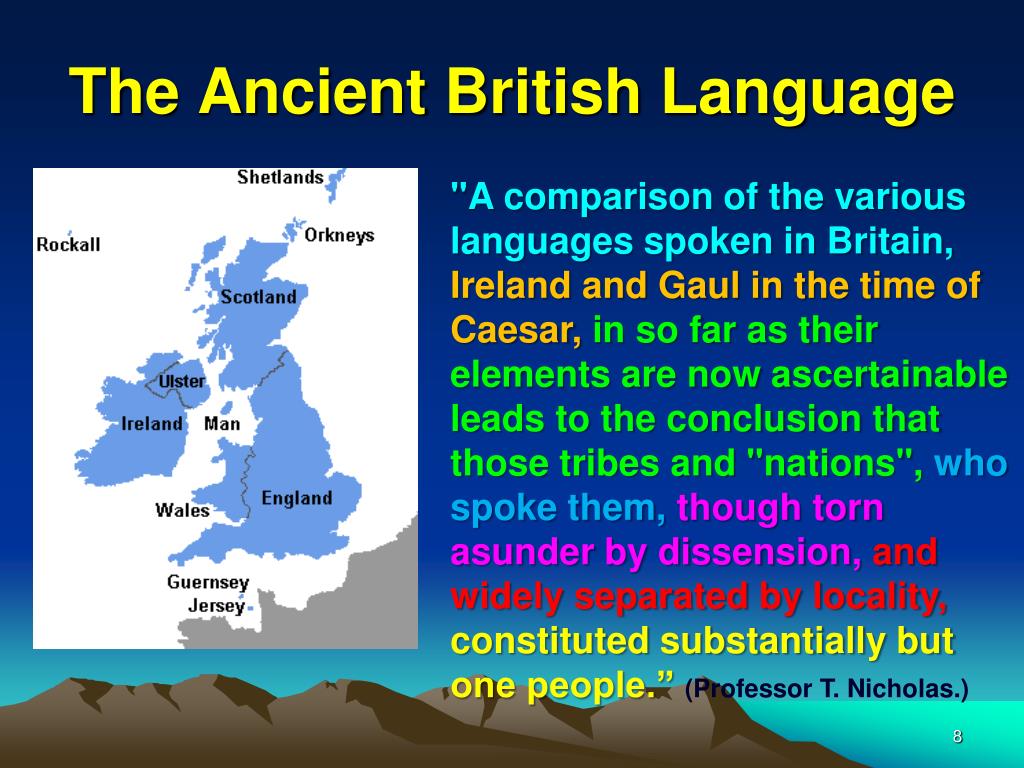

Automatic language identification is a natural language processing problem: determining the natural language in which a given text is written. In this paper we present a statistical method for automatic language identification of written text using dictionaries containing stop words and diacritics. This method was chosen because stop words and diacritics are very specific to a language: although some languages share certain words and special characters, they do not share all of them. We propose different approaches that combine the two dictionaries to accurately determine the language of textual corpora. The languages taken into account were Romance languages, because they are very similar and are usually hard to distinguish from a computational point of view.

We have tested our method using a Twitter corpus and a news article corpus. Both corpora consist of UTF-8 encoded text, so the diacritics could be taken into account; when a text has no diacritics, only the stop words are used to determine its language. The experimental results show that the proposed method has an accuracy of over 90% for small texts and over 99.8% for large texts.

Related: *An Assessment of Language Identification Methods on Tweets and Wikipedia Articles*.
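The abstract does not say exactly how the two dictionaries are combined. The following is therefore only a minimal sketch under stated assumptions. The stop-word and diacritic dictionaries are tiny, hypothetical samples for five Romance languages, and the combination rule is a plain sum of stop-word hits and diacritic hits. The sketch does reproduce the fallback the abstract describes: a text without diacritics is scored on its stop words alone.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny dictionaries for illustration only;
# a real system would use complete per-language stop-word lists.
STOP_WORDS = {
    "es": {"el", "la", "los", "las", "que", "y", "en", "un", "una", "por", "con", "es"},
    "pt": {"o", "a", "os", "as", "que", "e", "em", "um", "uma", "por", "com", "não"},
    "it": {"il", "lo", "la", "gli", "che", "e", "in", "un", "una", "per", "con", "non"},
    "fr": {"le", "la", "les", "que", "et", "en", "un", "une", "pour", "avec", "est", "pas"},
    "ro": {"și", "în", "un", "o", "că", "pe", "cu", "pentru", "este", "nu", "la", "din"},
}

# Hypothetical diacritic sets (accented letters typical of each language).
DIACRITICS = {
    "es": set("ñáéíóúü"),
    "pt": set("ãõçáéíóúâêô"),
    "it": set("àèéìòù"),
    "fr": set("àâçéèêëîïôûùüÿ"),
    "ro": set("ăâîșț"),
}


def identify(text: str) -> list[str]:
    """Return the language code(s) with the highest combined score."""
    lowered = text.lower()
    words = re.findall(r"\w+", lowered)
    scores = Counter()
    for lang in STOP_WORDS:
        # One point per token found in the language's stop-word dictionary.
        scores[lang] += sum(1 for w in words if w in STOP_WORDS[lang])
        # One point per character found in the language's diacritic dictionary.
        # Text without diacritics adds nothing here, so stop words alone decide.
        scores[lang] += sum(1 for ch in lowered if ch in DIACRITICS[lang])
    best = max(scores.values(), default=0)
    # Ties are all returned; an empty list means no evidence at all.
    return sorted(lang for lang, s in scores.items() if s == best and best > 0)


print(identify("El perro come la comida en la casa"))  # ['es']
```

Returning every tied language, rather than picking one arbitrarily, makes it visible when the dictionaries cannot separate closely related languages.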
We distribute two models for language identification, which can recognize 176 languages. One of them is faster and slightly more accurate, but has a larger file size. Both were trained on data from Wikipedia, Tatoeba and SETimes, used under CC-BY-SA.

# Language identification

Richard Suchenwirth - Of the thousands of languages in the world, we usually know one very well and a few more or less. Others we can at least identify when we see them, and still others leave us in the dark. In this fun project I want to find out how to let Tcl do language identification, so that for a given string the ISO code of its language (e.g. en for English, de for German) is returned.

Languages like Arabic, Greek, Hebrew, Japanese and Korean can be easily identified by the Unicode ranges of their specific scripts. So I concentrated on languages using the Latin alphabet, mostly European, but with some Malay thrown in for fun (or rather trouble, to make things more difficult).

The idea was to collect typical substrings for each language, and see how often they occur in the string in question. These may be characteristic single letters, word parts, or even frequent words. If they're very characteristic, they may be specified two or more times, and thus score more. The sum of such hits is the score, and the language or languages with the highest score are the result. The "feature substrings" are collected in a paired list of language codes and their substrings.

LES: The strings for Portuguese are a good start, but I don't agree with some of them because they can easily be found in other languages, like 'nt'.

RS: Sure, but they can still be useful if they help to raise the score (compare "ch" in de, en, es, fr).
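The original Tcl paired list is not reproduced here. What follows is a Python sketch of the same substring-scoring idea, built on several assumptions. The feature lists are hypothetical, not the wiki's. Hits are counted with `str.count`, which counts non-overlapping occurrences. Repeating a substring in a list makes it score double. A script check runs first, using `unicodedata.name` prefixes to handle the non-Latin alphabets mentioned above.

```python
import unicodedata
from collections import Counter

# Hypothetical paired list (language code, feature substrings); not the wiki's data.
# A substring listed twice (e.g. "ß", "th") counts twice, as the text describes.
FEATURES = [
    ("de", ["sch", "ß", "ß", "ei", "ie", " und ", " der ", " die "]),
    ("en", ["th", "th", " the ", " and ", "ing ", " of "]),
    ("es", ["ñ", "ñ", " el ", " los ", "ción", " que "]),
    ("fr", ["eau", " les ", " est ", "ç", "ou", " une "]),
    ("ms", [" yang ", " dan ", "ng", " ini ", " itu "]),
]

# Assumed mapping from Unicode character-name prefixes to language codes
# for scripts that identify a language outright.
SCRIPTS = {
    "ARABIC": "ar", "GREEK": "el", "HEBREW": "he",
    "HANGUL": "ko", "HIRAGANA": "ja", "KATAKANA": "ja",
}


def detect_script(text: str):
    """Return a language code if the text uses a distinctive script, else None."""
    counts = Counter()
    for ch in text:
        name = unicodedata.name(ch, "")  # "" for unnamed characters
        for prefix, lang in SCRIPTS.items():
            if name.startswith(prefix):
                counts[lang] += 1
                break
    return counts.most_common(1)[0][0] if counts else None


def identify(text: str) -> list[str]:
    """Return the language code(s) with the highest substring score."""
    script_lang = detect_script(text)
    if script_lang:
        return [script_lang]
    # Pad with spaces so whole-word features like " und " match at the edges.
    padded = " " + text.lower() + " "
    scores = {lang: sum(padded.count(s) for s in subs) for lang, subs in FEATURES}
    best = max(scores.values())
    if best == 0:
        return []
    return [lang for lang, s in scores.items() if s == best]


print(identify("Das ist die Straße und der Weg"))  # ['de']
```

Space-delimited features are a cheap stand-in for word boundaries. They miss words followed directly by punctuation, which is one reason to keep short letter groups like "th" or "ng" in the lists as well.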
